Technology Policy

Ethics of artificial intelligence

The ethics of artificial intelligence examines the moral, legal, and societal questions that arise from the design, deployment, and governance of AI systems, including fairness, accountability, privacy, transparency, safety, and human oversight. Major international frameworks and regulations, such as the OECD AI Principles, UNESCO’s 2021 Recommendation on the Ethics of Artificial Intelligence, the EU AI Act, and national guidance in the United States and elsewhere, aim to translate these values into concrete requirements and practices across the AI lifecycle.

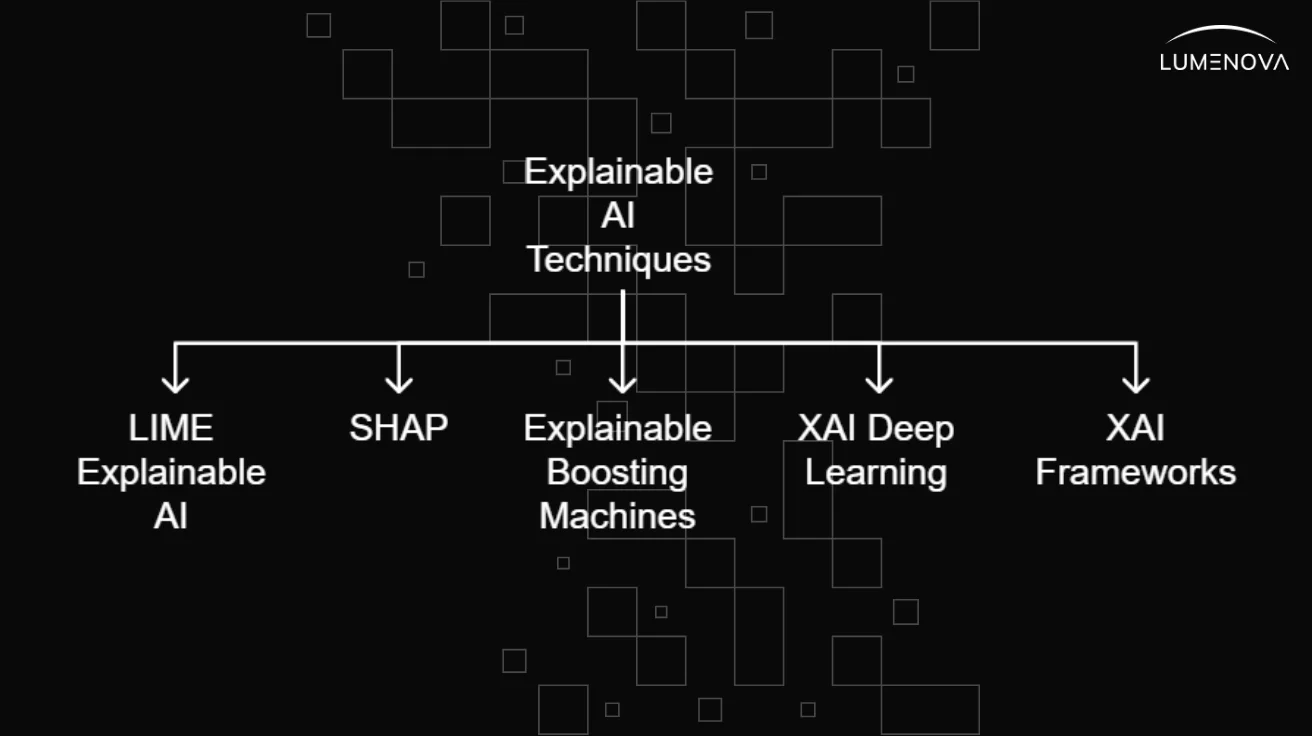

Explainable artificial intelligence

Explainable artificial intelligence (XAI) refers to methods and practices that make the behavior and outputs of AI systems understandable to humans. It encompasses both inherently interpretable models, such as linear models and short decision trees whose decision logic can be read directly, and post‑hoc techniques that explain the outputs of complex models after they have been trained. XAI is closely linked to trust, accountability, safety, and regulatory compliance, and public agencies and standards bodies have issued principles and requirements for explainability in high‑stakes applications such as credit, healthcare, and public services.
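To illustrate the post‑hoc approach, here is a minimal sketch of permutation feature importance, one common model‑agnostic explanation technique. It is chosen here purely for illustration and is not a method prescribed by any particular framework or regulation. The model `black_box`, the helper `permutation_importance`, and the synthetic data are all hypothetical. The idea is to shuffle one input feature at a time and measure how much the model's error grows: features the model relies on produce large increases, while features it ignores produce none.

```python
import random
from typing import Callable, List, Sequence

Model = Callable[[Sequence[float]], float]


def black_box(row: Sequence[float]) -> float:
    """Hypothetical opaque model: relies strongly on feature 0,
    weakly on feature 1, and ignores feature 2 entirely."""
    return 3.0 * row[0] + 0.5 * row[1] ** 2


def mse(model: Model, X: List[List[float]], y: List[float]) -> float:
    """Mean squared error of the model's predictions against targets y."""
    return sum((model(row) - target) ** 2 for row, target in zip(X, y)) / len(X)


def permutation_importance(model: Model, X: List[List[float]], y: List[float],
                           n_repeats: int = 5, seed: int = 0) -> List[float]:
    """Average increase in MSE when each feature column is shuffled.

    Post-hoc and model-agnostic: it only calls the model and never
    inspects its internals. (Hypothetical helper for illustration.)"""
    rng = random.Random(seed)
    baseline = mse(model, X, y)
    importances = []
    for j in range(len(X[0])):
        total = 0.0
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            # Copy each row, replacing only feature j with its shuffled value.
            X_perm = [row[:j] + [value] + row[j + 1:] for row, value in zip(X, column)]
            total += mse(model, X_perm, y) - baseline
        importances.append(total / n_repeats)
    return importances


# Usage: synthetic data whose targets come from the model itself,
# so the baseline error is exactly zero.
data_rng = random.Random(42)
X = [[data_rng.random() for _ in range(3)] for _ in range(200)]
y = [black_box(row) for row in X]
scores = permutation_importance(black_box, X, y)
for name, score in zip(["feature_0", "feature_1", "feature_2"], scores):
    print(f"{name}: {score:.4f}")
```

In this setup `feature_2` scores exactly zero because the model never reads it, and `feature_0` scores highest. An inherently interpretable model, such as a plain linear scorer, would not need this step, since its weights could be read directly. Permutation importance also has known limits; for example, strongly correlated features can share or mask one another's importance.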